On August 27, 2025, the Embedded LLM team hosted the vLLM Singapore Meetup 2025 at SGInnovate. Unlike the inaugural full-day Developer Day earlier this year, this was a more casual, compact evening session designed to keep the momentum alive, foster community spirit, and give space for deeper conversations in a smaller setting.

With around 50 attendees, the meetup offered a fun, interactive environment where developers, engineers, and researchers could exchange ideas while enjoying dinner and networking.

🔑 Key Highlights

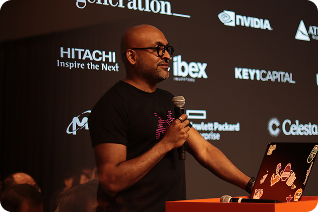

Introduction to vLLM & vLLM v1 Development

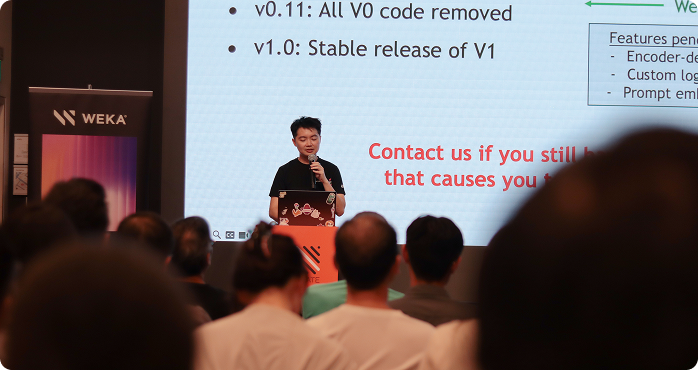

A major vLLM contributor reflected on the journey to vLLM v1, sharing behind-the-scenes insights into its architecture and the lessons learned from building one of the most widely adopted LLM inference engines.

Optimizing vLLM Inference on AMD GPUs

An AMD Senior Product Manager shared hands-on techniques for tuning vLLM on AMD Data Center GPUs, covering both performance best practices and production deployment strategies.

WEKA’s Augmented Memory Grid (AMG)

The WEKA team introduced Augmented Memory Grid (AMG), a persistent KV cache layer built on GPUDirect Storage (GDS). The talk showed how tighter integration between storage and GPU compute can unlock higher throughput for large-scale inference.

Deploying AudioLLM with Ray

The MERaLION team walked participants through deploying AudioLLM on Ray, with a focus on autoscaling and load balancing strategies for real-world multimodal workloads.

While smaller in scale, this meetup played a critical role in sustaining the energy of the vLLM Asia community.

✨ Looking Ahead

The August session proved that smaller, focused meetups can be just as impactful as large-scale events. By keeping things casual and community-driven, the vLLM Asia Developer Meetup continues to strengthen ties among contributors, users, and partners.

We’re excited to see the community grow stronger, and this meetup is one more step in our shared journey toward the future of efficient AI inference. 🚀